The first version of the .NET Framework (1.0) was released in 2002 to much enthusiasm. The latest version, the .NET Framework 2.0, was introduced in 2005 and is considered a major release of the framework.

With each release of the framework, Microsoft has tried to keep breaking changes to existing code to a minimum. Thus far, it has been very successful at this goal.

Even so, set up a staging server and fully test the upgrade of your applications to the .NET Framework 2.0 there, rather than upgrading a live application directly.

The following details some of the changes that are new to the .NET Framework 2.0 as well as new additions to Visual Studio 2005 — the development environment for the .NET Framework 2.0.

SQL Server integration

After a long wait, the latest version of SQL Server has finally been released. This version, SQL Server 2005, is special in many ways. Most important for the .NET developer is that SQL Server 2005 now hosts the CLR. Microsoft has tied the .NET Framework 2.0, Visual Studio 2005, and SQL Server 2005 together, so that all three products are now released in unison. This matters because many applications use all three of these pieces, and they need to be upgraded together so that they keep working with each other seamlessly.

Because SQL Server 2005 now hosts the CLR, you are no longer limited to the T-SQL programming language when building the database aspects of your application. Instead, you can build items such as stored procedures, triggers, and even data types in any of the .NET-compliant languages, such as C#.

SQL Server Express is the 2005 version of SQL Server that replaces MSDE. This version doesn't have the strict limitations MSDE had.

64-Bit support

Most programming today is done on 32-bit machines. It was a monumental leap forward in application development when computers went from 16-bit to 32-bit. More and more enterprises are moving to the latest and greatest 64-bit servers from companies such as Intel (Itanium chips) and AMD (x64 chips) and the .NET Framework 2.0 has now been 64-bit enabled for this great migration.

Microsoft has been working hard to make sure that everything you build in the 32-bit world of .NET will run in the 64-bit world. This means that everything you do with SQL Server 2005 or ASP.NET will not be affected by moving to 64-bit. Microsoft made a lot of changes to the CLR in order to get a 64-bit version of .NET to work. Changes were made to things such as garbage collection (to handle larger amounts of data), the JIT compilation process, exception handling, and more.

Moving to 64-bit gives you some powerful additions. The most important (and most obvious) is that 64-bit servers give you a larger address space. A 64-bit machine also works natively with larger values. For instance, 32 bits can represent 2^32 (4,294,967,296) distinct values, while 64 bits can represent 2^64 (18,446,744,073,709,551,616). This comes in quite handy for applications that need to calculate things such as the U.S. debt or other very large numbers.
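The difference in range can be checked from code. The following is a minimal sketch, assuming a plain console program; the WordSizeDemo class name is made up for illustration. Note that the 64-bit integer types (long and ulong) are available to C# code whether or not the process itself is 64-bit; what changes with the platform is the pointer size reported by IntPtr.Size.

```csharp
using System;

public static class WordSizeDemo
{
    public static void Main()
    {
        // IntPtr.Size is the pointer width of the current process in bytes:
        // 4 in a 32-bit process, 8 in a 64-bit process.
        Console.WriteLine("Pointer size: " + (IntPtr.Size * 8) + " bits");

        // Largest values that fit in unsigned 32-bit and 64-bit integers,
        // that is, 2^32 - 1 and 2^64 - 1.
        Console.WriteLine(uint.MaxValue);   // 4294967295
        Console.WriteLine(ulong.MaxValue);  // 18446744073709551615
    }
}
```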

Companies such as Microsoft and IBM are pushing their customers to take a look at 64-bit. One of the main areas of focus is database and virtual storage capabilities, as this is seen as an area in which a move to 64-bit makes a lot of sense.

Visual Studio 2005 can install and run on a 64-bit computer. This IDE has both 32-bit and 64-bit compilers on it. One final caveat is that the 64-bit .NET Framework is meant only for Windows Server 2003 SP1 or better as well as other 64-bit Microsoft operating systems that might come our way.

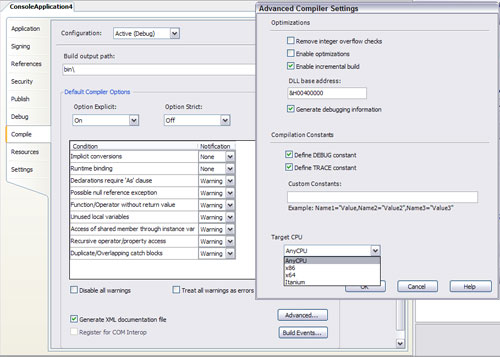

When you build your applications in Visual Studio 2005, you can change the build properties so that an application compiles specifically for 64-bit computers. To find this setting, open your application's properties and click the Build tab on the Properties page. On the Build page, click the Advanced button to open the Advanced Compiler Setting dialog. At the bottom of this dialog, you can change the target CPU and set your application to be built for either an Intel 64-bit computer or an AMD 64-bit computer. This is shown in Figure 1.

Figure 1: Building your application for 64-bit

Generics

In order to make collections a more powerful feature and also increase their efficiency and usability, generics were introduced to the .NET Framework 2.0. This introduction to the underlying framework means that languages such as C# and Visual Basic 2005 can now build applications that use generic types. The idea of generics is nothing new. They look similar to C++ templates but are a bit different. You can also find generics in other languages, such as Java. Their introduction into the .NET Framework 2.0 languages is a huge benefit for the user.

Generics enable you to make a generic collection that is still strongly typed. This gives you fewer chances for errors (because type mismatches are caught at compile time rather than at runtime), better performance, and IntelliSense support when you are working with the collections.

To utilize generics in your code, you will need to make reference to the System.Collections.Generic namespace. This will give you access to generic versions of the Stack, Dictionary, SortedDictionary, List and Queue classes. The following demonstrates the use of a generic version of the Stack class:

    void Page_Load(object sender, EventArgs e)
    {
        // Declare a stack that can hold only strings.
        System.Collections.Generic.Stack<string> myStack =
            new System.Collections.Generic.Stack<string>();
        myStack.Push("St. Louis Rams");
        myStack.Push("Indianapolis Colts");
        myStack.Push("Minnesota Vikings");
        Array myArray;
        myArray = myStack.ToArray();
        foreach(string item in myArray)
        {
            Label1.Text += item + "<br />";
        }
    }

In the above example, the Stack class is explicitly declared to hold items of type string. You specify the element type in angle brackets, so this example uses Stack<string>. If you wanted a stack of something other than string (for instance, int), you would specify Stack<int>.

Because the element type is fixed as soon as the Stack is created, the collection no longer stores every item as type object and then casts it back to type string later (in the foreach loop). For value types such as int, that round trip through object is called boxing and unboxing, and it is expensive. Because this code specifies the type up front, performance when working with the collection improves.
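The cost described above is easiest to see with a value type. The following is a minimal sketch, assuming a console program; the BoxingDemo class and its two methods are hypothetical names used only for illustration. The non-generic Stack stores each int as object and needs a cast on the way out, while Stack<int> stores the values directly.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class BoxingDemo
{
    public static int SumNonGeneric()
    {
        Stack stack = new Stack();   // non-generic: every item is stored as object
        stack.Push(1);               // the int is boxed here
        stack.Push(2);
        int total = 0;
        foreach (object item in stack)
        {
            total += (int)item;      // explicit cast unboxes the value
        }
        return total;
    }

    public static int SumGeneric()
    {
        Stack<int> stack = new Stack<int>();  // strongly typed: no boxing
        stack.Push(1);
        stack.Push(2);
        int total = 0;
        foreach (int item in stack)
        {
            total += item;           // no cast required
        }
        return total;
    }

    public static void Main()
    {
        Console.WriteLine(SumNonGeneric()); // 3
        Console.WriteLine(SumGeneric());    // 3
    }
}
```

With the generic version, an attempt to push a string onto the stack is rejected by the compiler instead of failing later with an InvalidCastException.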

Anonymous methods

Anonymous methods enable you to put programming steps within a delegate that you can then later execute instead of creating an entirely new method. For instance, if you were not using anonymous methods, you would use delegates in a manner similar to the following:

    public partial class Default_aspx
    {
        void Page_Load(object sender, EventArgs e)
        {
            this.Button1.Click += ButtonWork;
        }
        void ButtonWork(object sender, EventArgs e)
        {
            Label1.Text = "You clicked the button!";
        }
    }

With anonymous methods, you can now put these actions directly in the delegate, as shown in the following example:

    public partial class Default_aspx
    {
        void Page_Load(object sender, EventArgs e)
        {
            this.Button1.Click += delegate(object myDelSender,
                EventArgs myDelEventArgs)
            {
                Label1.Text = "You clicked the button!";
            };
        }
    }

When using anonymous methods, there is no need to create a separate method. Instead you place the necessary code directly after the delegate declaration. The statements and steps to be executed by the delegate are placed between curly braces and closed with a semicolon.
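The same syntax works outside of ASP.NET. The following is a minimal sketch, assuming a console program; the Notify delegate type and the AnonymousMethodDemo class are hypothetical names introduced for illustration. It also shows that an anonymous method can read and modify a local variable of the enclosing method.

```csharp
using System;

// Hypothetical delegate type used only for this example.
public delegate void Notify(string message);

public static class AnonymousMethodDemo
{
    public static int Run()
    {
        int callCount = 0;

        // The body between the braces is the delegate's code; note the
        // closing semicolon, because this is an assignment statement.
        Notify handler = delegate(string message)
        {
            callCount++;  // captured local variable from the enclosing method
            Console.WriteLine("Received: " + message);
        };

        handler("first");
        handler("second");
        return callCount;
    }

    public static void Main()
    {
        Console.WriteLine(Run()); // 2
    }
}
```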

Nullable types

Because generics have been introduced into the underlying .NET Framework 2.0, it is now possible to create nullable value types using System.Nullable<T>. This is ideal for situations such as creating sets of nullable items of type int. Before this, it was always difficult to create an int with a null value from the get-go or to later assign null values to an int.

To create a nullable type of type int, you would use the following syntax:

    System.Nullable<int> x = new System.Nullable<int>();

There is a new type modifier that you can also use to create a type as nullable. This is shown in the following example:

    int? salary = 800000;

The ability to create nullable types is not specific to C#. It was built into the .NET Framework itself and, as stated, exists because of the new generics feature in .NET. For this reason, you will also find nullable types in Visual Basic 2005.
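Once a value is nullable, you typically check whether it holds a value before using it. The following is a minimal sketch, assuming a console program; the NullableDemo class and the SalaryOrDefault helper are hypothetical names. It uses the HasValue and Value members of System.Nullable<T> and the C# ?? operator to supply a fallback.

```csharp
using System;

public static class NullableDemo
{
    // Returns the salary, or 0 when no salary has been set.
    public static int SalaryOrDefault(int? salary)
    {
        return salary ?? 0;
    }

    public static void Main()
    {
        int? salary = null;
        Console.WriteLine(salary.HasValue);          // False
        Console.WriteLine(SalaryOrDefault(salary));  // 0

        salary = 800000;
        Console.WriteLine(salary.HasValue);          // True
        Console.WriteLine(salary.Value);             // 800000

        // int? is shorthand for System.Nullable<int>.
        Nullable<int> same = salary;
        Console.WriteLine(same == salary);           // True
    }
}
```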

Iterators

Iterators enable you to use foreach loops on your own custom types. To accomplish this, you need to have your class implement the IEnumerable interface as shown here:

    using System;
    using System.Collections;
    public class myList : IEnumerable
    {
        internal object[] elements;
        internal int count;
        // Each yield return hands the next item to the foreach loop.
        public IEnumerator GetEnumerator()
        {
            yield return "St. Louis Rams";
            yield return "Indianapolis Colts";
            yield return "Minnesota Vikings";
        }
    }

In order to use the IEnumerator interface, you will need to make a reference to the System.Collections namespace. With this all in place, you can then iterate through the custom class as shown here:

    void Page_Load(object sender, EventArgs e)
    {
        myList IteratorList = new myList();
        foreach(string item in IteratorList)
        {
            Response.Write(item.ToString() + "<br />");
        }
    }
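In practice an iterator usually walks over data the class already holds rather than a fixed list of literals. The following is a minimal sketch, assuming a console program; the TeamList and IteratorDemo classes are hypothetical names introduced for illustration. The GetEnumerator method yields each element of a private array in turn.

```csharp
using System;
using System.Collections;

public class TeamList : IEnumerable
{
    private readonly string[] teams;

    public TeamList(params string[] teams)
    {
        this.teams = teams;
    }

    // The compiler turns this method into an enumerator; execution
    // resumes after the yield return each time foreach asks for an item.
    public IEnumerator GetEnumerator()
    {
        for (int i = 0; i < teams.Length; i++)
        {
            yield return teams[i];
        }
    }
}

public static class IteratorDemo
{
    public static void Main()
    {
        TeamList list = new TeamList("St. Louis Rams", "Indianapolis Colts");
        foreach (string team in list)
        {
            Console.WriteLine(team);
        }
    }
}
```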

Partial Classes

Partial classes are a new feature to the .NET Framework 2.0 and again C# takes advantage of this addition. Partial classes allow you to divide up a single class into multiple class files, which are later combined into a single class when compiled.

To create a partial class, you use the partial keyword on every declaration of the class that is to be combined. The partial keyword immediately precedes the class keyword in each part. For instance, you might have a simple class called Calculator as shown here:

    public partial class Calculator
    {
        public int Add(int a, int b)
        {
            return a + b;
        }
    }

From here, you can create a second part of the same class, typically in another file, as shown in the following example:

    public partial class Calculator
    {
        public int Subtract(int a, int b)
        {
            return a - b;
        }
    }

When compiled, these declarations are brought together into a single Calculator class, as if they had been written together to begin with.
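From the caller's point of view there is only one class. The following is a minimal sketch, assuming a console program; the file names in the comments and the PartialDemo class are hypothetical. Both parts are marked partial, and a single Calculator object exposes the methods from each part.

```csharp
using System;

// Imagine this part lives in a file such as Calculator.Add.cs (hypothetical name).
public partial class Calculator
{
    public int Add(int a, int b)
    {
        return a + b;
    }
}

// And this part lives in a file such as Calculator.Subtract.cs (hypothetical name).
public partial class Calculator
{
    public int Subtract(int a, int b)
    {
        return a - b;
    }
}

public static class PartialDemo
{
    public static void Main()
    {
        Calculator calc = new Calculator();
        Console.WriteLine(calc.Add(5, 3));       // 8
        Console.WriteLine(calc.Subtract(5, 3));  // 2
    }
}
```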

Where C# Fits In

In one sense, C# can be seen as being the same thing to programming languages as .NET is to the Windows environment. Just as Microsoft has been adding more and more features to Windows and the Windows API over the past decade, Visual Basic 2005 and C++ have undergone expansion. Although Visual Basic and C++ have ended up as hugely powerful languages as a result of this, both languages also suffer from problems due to the legacies of how they have evolved.

In the case of Visual Basic 6 and earlier versions, the main strength of the language was that it was simple to understand and made many programming tasks easy, largely hiding the details of the Windows API and the COM component infrastructure from the developer. The downside was that Visual Basic was never truly object-oriented, so large applications quickly became disorganized and hard to maintain. In addition, because Visual Basic's syntax was inherited from early versions of BASIC (which, in turn, was designed to be intuitively simple for beginning programmers to understand, rather than to write large commercial applications), it didn't really lend itself to well-structured or object-oriented programs.

C++, on the other hand, has its roots in the ANSI C++ language definition. It isn't completely ANSI-compliant for the simple reason that Microsoft first wrote its C++ compiler before the ANSI definition had become official, but it comes close. Unfortunately, this has led to two problems. First, ANSI C++ has its roots in a decade-old state of technology, and this shows up in a lack of support for modern concepts (such as Unicode strings and generating XML documentation), and for some archaic syntax structures designed for the compilers of yesteryear (such as the separation of declaration from definition of member functions). Second, Microsoft has been simultaneously trying to evolve C++ into a language that is designed for high-performance tasks on Windows, and in order to achieve that they've been forced to add a huge number of Microsoft-specific keywords as well as various libraries to the language. The result is that on Windows, the language has become a complete mess. Just ask C++ developers how many definitions for a string they can think of: char*, LPTSTR, string, CString (MFC version), CString (WTL version), wchar_t*, OLECHAR*, and so on.

Now enter .NET, a completely new environment that requires new extensions to both languages. Microsoft has gotten around this by adding yet more Microsoft-specific keywords to C++, and by completely revamping Visual Basic, first into Visual Basic .NET and then into Visual Basic 2005, a language that retains some of the basic VB syntax but that is so different in design that we can consider it to be, for all practical purposes, a new language.

It's in this context that Microsoft has decided to give developers an alternative — a language designed specifically for .NET, and designed with a clean slate. Visual C# 2005 is the result. Officially, Microsoft describes C# as a "simple, modern, object-oriented, and type-safe programming language derived from C and C++." Most independent observers would probably change that to "derived from C, C++, and Java." Such descriptions are technically accurate but do little to convey the beauty or elegance of the language. Syntactically, C# is very similar to both C++ and Java, to such an extent that many keywords are the same, and C# also shares the same block structure with braces ({}) to mark blocks of code, and semicolons to separate statements. The first impression of a piece of C# code is that it looks quite like C++ or Java code. Beyond that initial similarity, however, C# is a lot easier to learn than C++, and of comparable difficulty to Java. Its design is more in tune with modern developer tools than both of those other languages, and it has been designed to give us, simultaneously, the ease of use of Visual Basic, and the high-performance, low-level memory access of C++ if required. Some of the features of C# are:

- Full support for classes and object-oriented programming, including both interface and implementation inheritance, virtual functions, and operator overloading.

- A consistent and well-defined set of basic types.

- Built-in support for automatic generation of XML documentation.

- Automatic cleanup of dynamically allocated memory.

- The facility to mark classes or methods with user-defined attributes. This can be useful for documentation and can have some effects on compilation (for example, marking methods to be compiled only in debug builds).

- Full access to the .NET base class library, as well as easy access to the Windows API (if you really need it, which won't be all that often).

- Pointers and direct memory access are available if required, but the language has been designed in such a way that you can work without them in almost all cases.

- Support for properties and events in the style of Visual Basic.

- Just by changing the compiler options, you can compile either to an executable or to a library of .NET components that can be called up by other code in the same way as ActiveX controls (COM components).

- C# can be used to write ASP.NET dynamic Web pages and XML Web services.
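A few of the features in this list can be seen together in a small class. The following is a minimal sketch, assuming a console program; the Thermostat and FeatureDemo classes are hypothetical names introduced for illustration. It shows a property, an event, and the triple-slash comments that the compiler can turn into XML documentation.

```csharp
using System;

/// <summary>A thermostat that raises an event when its target changes.</summary>
public class Thermostat
{
    private int target;

    /// <summary>Raised after <see cref="Target"/> is given a new value.</summary>
    public event EventHandler TargetChanged;

    /// <summary>Target temperature in degrees.</summary>
    public int Target
    {
        get { return target; }
        set
        {
            if (value == target) return;   // unchanged: do not raise the event
            target = value;
            if (TargetChanged != null) TargetChanged(this, EventArgs.Empty);
        }
    }
}

public static class FeatureDemo
{
    public static void Main()
    {
        Thermostat t = new Thermostat();
        t.TargetChanged += delegate(object sender, EventArgs e)
        {
            Console.WriteLine("Target is now " + ((Thermostat)sender).Target);
        };
        t.Target = 21;  // prints "Target is now 21"
        t.Target = 21;  // same value, so nothing is printed
    }
}
```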

Most of the above statements, it should be pointed out, do also apply to Visual Basic 2005 and Managed C++. The fact that C# is designed from the start to work with .NET, however, means that its support for the features of .NET is both more complete and offered within the context of a more suitable syntax than in those other languages. While the C# language itself is very similar to Java, there are some improvements. In particular, Java is not designed to work with the .NET environment.

Before we leave the subject, we should point out a couple of limitations of C#. The one area the language is not designed for is time-critical or extremely high-performance code: the kind where you really are worried about whether a loop takes 1,000 or 1,050 machine cycles to run through, and you need to clean up your resources the millisecond they are no longer needed. C++ is likely to continue to reign supreme among low-level languages in this area. C# lacks certain key facilities needed for extremely high-performance apps, including the ability to specify inline functions and destructors that are guaranteed to run at particular points in the code. However, the proportion of applications that fall into this category is very low.